KL divergence asymmetry pic.twitter.com/bvOAyCIMHw

— Ari Seff (@ari_seff) September 9, 2020

Author: samuel.cheng@ou.edu

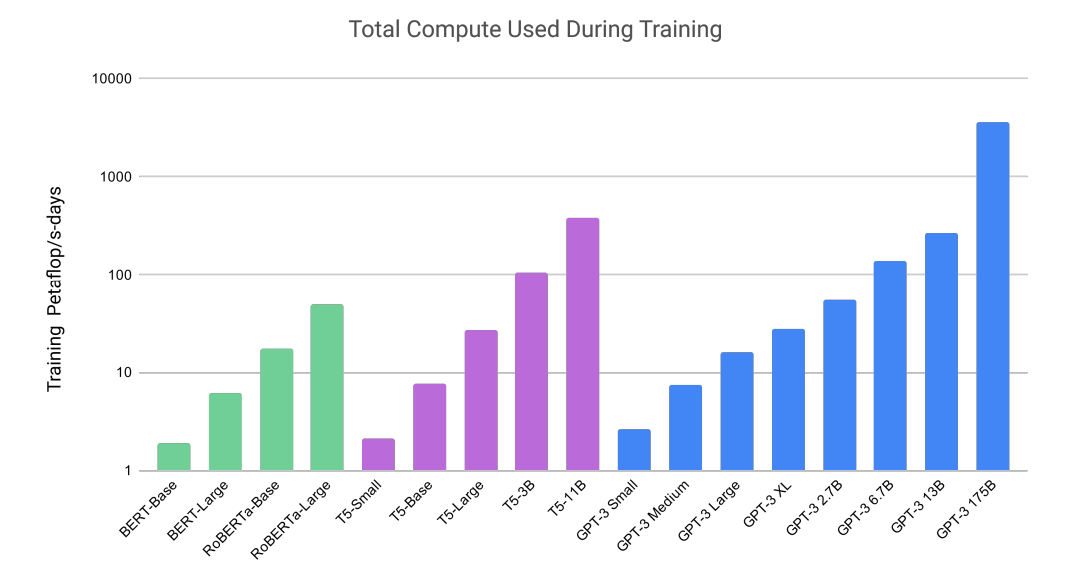

OpenAI released its latest language model. I found the training compute comparison fascinating (~1000x BERT-base). The large model has 175B parameters, and some say it cost $5M to train. While it is definitely impressive, I agree with Yannic that probably no “reasoning” is involved. It is very likely that the model “remembers” the data somehow and “recalls” the best matches from all the training data.

The idea of contrastive learning has been around for a while. It was introduced for unsupervised/semi-supervised learning. When we only have unlabelled data, we would like to train a representation that groups similar data together. The way to do that in contrastive learning is to perturb a target sample to generate positive samples. The perturbations can be translation, rotation, etc. We can then treat all other samples as negative samples. The goal is to introduce a contrastive loss that pushes the negative samples away from the target sample and pulls the positive samples towards it.

Even for the case of supervised learning, the contrastive learning step can be used as a pre-training step to train the entire network (excluding the last classification layer). After the pretraining, we can train the last layer with labelled data while keeping all the other layers fixed.

The main innovation in this work is that, rather than treating all other samples as negative samples, it also treats data with the same label as positive samples.
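To make the positive/negative bookkeeping concrete, here is a minimal NumPy sketch of a supervised contrastive loss. It is a simplified illustration under stated assumptions, not the paper's code: the function name `supervised_contrastive_loss`, the temperature of 0.1, and the toy features are all made up for this example.

```python
import numpy as np

def supervised_contrastive_loss(features, labels, temperature=0.1):
    """Average loss over anchors that have at least one same-label positive."""
    # L2-normalize so that dot products are cosine similarities.
    z = features / np.linalg.norm(features, axis=1, keepdims=True)
    logits = z @ z.T / temperature
    not_self = ~np.eye(len(labels), dtype=bool)
    # Positives: every *other* sample that shares the anchor's label.
    positives = (labels[:, None] == labels[None, :]) & not_self
    # Log-softmax over all other samples (the anchor itself is excluded).
    logits = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    exp_logits = np.exp(logits) * not_self
    log_prob = logits - np.log(exp_logits.sum(axis=1, keepdims=True))
    # Mean log-probability of the positives, per anchor.
    pos_count = positives.sum(axis=1)
    valid = pos_count > 0
    mean_log_prob_pos = (log_prob * positives).sum(axis=1)[valid] / pos_count[valid]
    return -mean_log_prob_pos.mean()

# Toy data (hypothetical): two tight clusters in 2-D.
features = np.array([[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]])
matching_labels = np.array([0, 0, 1, 1])     # labels agree with the clusters
mismatched_labels = np.array([0, 1, 0, 1])   # labels cut across the clusters

loss_matching = supervised_contrastive_loss(features, matching_labels)
loss_mismatched = supervised_contrastive_loss(features, mismatched_labels)
```

When the labels agree with the geometry, the positives are already the nearest neighbours and the loss is small. With mismatched labels, the loss is large because same-label samples sit far apart.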

Motivation

- Want to verify if someone has used your dataset for training

Idea

- Introduce extra (unreal) feature to data. For example, cat feature to cat image, dog feature to dog image

- Verify if dataset was used with hypothesis testing

Comments

- It seems that the same classifier is used for both training and testing. If a different classifier is applied to the marked data, I am not sure the method will actually work

- The “radioactive” images actually look very bad

- The idea does not seem to be new, and the execution is quite doubtful. It reminds me of the watermarking techniques popular in the early 2000s, which never seemed to take off since they didn't really work.

Ref

Motivation:

- Most ML models become static once they are trained. Otherwise, one has to retrain the model to keep it adapted to a new environment.

Main idea:

- Instead of updating weights directly, update the rules (determined by the Hebbian coefficients) that update the weights

Some details:

- Weights are updated by Hebbian ABCD model, $latex \Delta w_{i,j} = \eta_w (A_w o_i o_j + B_w o_i +C_w o_j + D_w)$

- Let $latex {\bf h}$ be the vectorized Hebbian coefficients. They are updated as $latex {\bf h}_{t+1} \leftarrow {\bf h}_t + \frac{\alpha}{n \sigma} \sum_{i=1}^n F_i \, \Delta {\bf h}_i$, where $latex \Delta {\bf h}_i \sim \mathcal{N} ({\bf 0}, \sigma{\bf I})$ and $latex F_i$ is a fitness evaluation of $latex {\bf h}_t + \Delta {\bf h}_i$ (I am not completely certain how the fitness is evaluated)
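The two levels of updating can be sketched in a few lines of NumPy. This is only a rough illustration under several assumptions. The coefficients are four scalars shared by all weights. The evolution step weights each perturbation by its fitness, which is the standard evolution-strategy estimator and may not match the paper exactly. The fitness is a toy stand-in (`toy_fitness`, with a made-up `target`) rather than anything from the paper.

```python
import numpy as np

def hebbian_update(w, pre, post, coeffs, eta=0.01):
    """ABCD rule on a weight matrix w of shape (n_pre, n_post)."""
    A, B, C, D = coeffs  # assumption: one scalar per term, shared by all weights
    # The o_i * o_j term is the outer product of pre- and post-synaptic outputs.
    dw = A * np.outer(pre, post) + B * pre[:, None] + C * post[None, :] + D
    return w + eta * dw

def es_step(h, fitness, rng, n=50, sigma=0.1, alpha=0.05):
    """One evolution-strategy step on the coefficient vector h."""
    # Perturbations with covariance sigma * I, i.e. standard deviation sqrt(sigma).
    noise = rng.normal(0.0, np.sqrt(sigma), size=(n, h.size))
    scores = np.array([fitness(h + dh) for dh in noise])
    # Fitness-weighted sum of the perturbations, scaled by alpha / (n * sigma).
    return h + alpha / (n * sigma) * scores @ noise

# Hebbian rule check: with A=1, B=C=D=0 and eta=1 the update is a pure outer product.
pre, post = np.array([1.0, 2.0]), np.array([1.0, 0.0, -1.0])
w_new = hebbian_update(np.zeros((2, 3)), pre, post, coeffs=(1.0, 0.0, 0.0, 0.0), eta=1.0)

# Toy fitness (hypothetical): higher when h is closer to a made-up target.
target = np.array([1.0, -1.0, 0.5, 2.0])
toy_fitness = lambda h: -np.sum((h - target) ** 2)

rng = np.random.default_rng(0)
h = np.zeros(4)
for _ in range(200):
    h = es_step(h, toy_fitness, rng, n=200)
```

In a real setting the fitness would presumably come from running an agent whose weights change under `hebbian_update` with the candidate coefficients, but that part is a guess here.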

Ref: video, paper, twitter posts

Problem statement:

- A transformer is computationally expensive when there are many keys and queries (we need to compute the dot product between each pair). It can be memory intensive as well, depending on the implementation.

Proposed solution:

- Group keys and queries into “buckets” first and only compute dot product of keys and queries in the same bucket.

- The buckets can be implemented with a form of “locality sensitive hashing” (LSH). Seriously, I always forget what LSH means. Can they create an even more obscure jargon? (To be fair, I think they definitely could.)

- I think a simple example for understanding LSH is the binary case. Assume two binary vectors are close according to the Hamming distance. Then, for length-100 binary vectors, say, we can sort them into 4 bins just according to the values of the first two bits. Actually, it is just coset and syndrome coding but with the parity check matrix “degenerated” (it cares about nothing but the first two bits). If we assume the bits are independent, this degenerated choice is okay. Otherwise, we can just use a random coset as in Slepian-Wolf coding.

- For the Reformer case, LSH is implemented as projections of the keys and queries onto random vectors. The signs of the resulting projections determine the bucket. This is exactly the generalization of Slepian-Wolf coding to the continuous case.

- Since they don't want to store the activations of every layer for backpropagation, they make the layers reversible instead. It is basically the same as the lifting scheme in wavelet construction.
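The sign-of-projection bucketing described above can be sketched as follows. This is a minimal illustration of the idea as summarized in these notes, not the Reformer implementation. The helper names `lsh_buckets` and `bucketed_attention_mask`, the number of bits, and the random data are all assumptions made for the example.

```python
import numpy as np

def lsh_buckets(vectors, projections):
    """Bucket id for each row, taken from the sign pattern of its projections."""
    bits = (vectors @ projections.T > 0).astype(int)        # (n_vectors, n_bits)
    return bits @ (1 << np.arange(projections.shape[0]))    # pack bits into one integer

def bucketed_attention_mask(q_buckets, k_buckets):
    """True where a query and a key share a bucket, so their dot product is kept."""
    return q_buckets[:, None] == k_buckets[None, :]

rng = np.random.default_rng(0)
n_bits, dim = 3, 16                              # 2**3 = 8 buckets (illustrative sizes)
projections = rng.normal(size=(n_bits, dim))     # random projection vectors
queries = rng.normal(size=(8, dim))
keys = rng.normal(size=(8, dim))

q_buckets = lsh_buckets(queries, projections)
k_buckets = lsh_buckets(keys, projections)
mask = bucketed_attention_mask(q_buckets, k_buckets)
```

Only the query/key pairs where `mask` is true would need a dot product. Vectors pointing in similar directions tend to share sign patterns, which is what makes the hash "locality sensitive".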

As a long-term Matlab user, I have almost completely switched to Python. But I wasn't paying attention to the different reshape behavior of numpy vs Matlab, and it cost me a night to catch a nasty bug. I always assumed that when I “vectorize” a matrix, it expands along the row index first, as in Matlab. It turns out that numpy's default behavior is to expand along the column index first. This is known as the ‘C’ order, as opposed to the Fortran order used in Matlab. To override the default behavior, simply set order to ‘F’ in the argument. For example,

z = x.reshape(3,4,order='F')

Watched the first lecture of underactuated robotics by Prof. Tedrake. It was great. His lecture notes/book are available online, and the example code is directly available on Colab.

So what is underactuated robotics? Consider a standard manipulator equation with state $latex q$

$latex M(q) \ddot{q}+C(q,\dot{q}) \dot{q} = \tau_g(q) + B(q) u,$

where the L.H.S. contains the “Ma” terms, the R.H.S. contains the force terms, $latex M(q)$ is the mass/inertia matrix and is positive definite, $latex u$ is the control input, and $latex B(q)$ maps the control input to generalized forces on $latex q$.

We can rearrange the above to

$latex \ddot{q}= M(q)^{-1} [ \tau_g(q) + B(q) u - C(q,\dot{q} )\dot{q}] =\underset{f_1(q,\dot{q})}{\underbrace{M(q)^{-1}[ \tau_g(q) - C(q,\dot{q} )\dot{q}]}} +\underset{f_2(q,\dot{q})}{\underbrace{M(q)^{-1} B(q) }}u .$

Note that if $latex f_2(q,\dot{q})$ has full row rank (or simply $latex B(q)$ has full row rank since $latex M(q)$ is positive definite and hence full-rank), then for any desired $latex \ddot{q}^d$, we can achieve that by picking $latex u$ as

$latex u = f_2^{\dagger} (q,\dot{q}) (\ddot{q}^{d} - f_1(q,\dot{q})),$ where $latex f_2^{\dagger}$ is the pseudo-inverse of $latex f_2$. We say such a robotic system is fully actuated.

On the other hand, if $latex f_2(q,\dot{q})$ does not have full row rank, the above trivial controller will not work. We then have a much more challenging and interesting scenario. And we say the robotic system is underactuated.
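The rank condition and the trivial controller are easy to check numerically. Below is a small NumPy sketch under stated assumptions: all the numbers are made up for a hypothetical 2-DOF system evaluated at a single state, `C_qdot` stands for the product $latex C(q,\dot{q})\dot{q}$, and the function names are illustrative.

```python
import numpy as np

def is_fully_actuated(M, B):
    """Check whether f2 = M^{-1} B has full row rank."""
    f2 = np.linalg.solve(M, B)
    return np.linalg.matrix_rank(f2) == f2.shape[0]

def feedback_control(M, C_qdot, tau_g, B, qdd_desired):
    """u = pinv(f2) (qdd_desired - f1), following the rearranged equation."""
    f1 = np.linalg.solve(M, tau_g - C_qdot)
    f2 = np.linalg.solve(M, B)
    return np.linalg.pinv(f2) @ (qdd_desired - f1)

# Hypothetical values at one state (q, qdot).
M = np.array([[2.0, 0.5], [0.5, 1.0]])   # positive definite mass matrix
C_qdot = np.array([0.1, -0.2])           # Coriolis term C(q, qdot) qdot
tau_g = np.array([-9.8, 0.0])            # gravity term
qdd_desired = np.array([1.0, -1.0])

B_full = np.eye(2)                       # a motor on every joint
B_under = np.array([[0.0], [1.0]])       # a motor on the second joint only

# Fully actuated case: the controller reproduces the desired acceleration.
u = feedback_control(M, C_qdot, tau_g, B_full, qdd_desired)
qdd_achieved = np.linalg.solve(M, tau_g - C_qdot + B_full @ u)

fully = is_fully_actuated(M, B_full)     # rank 2 of 2
under = is_fully_actuated(M, B_under)    # rank 1 of 2
```

With `B_under`, the pseudo-inverse still returns some `u`, but it can only match the desired acceleration in a least-squares sense, which is why the underactuated case needs a smarter controller.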